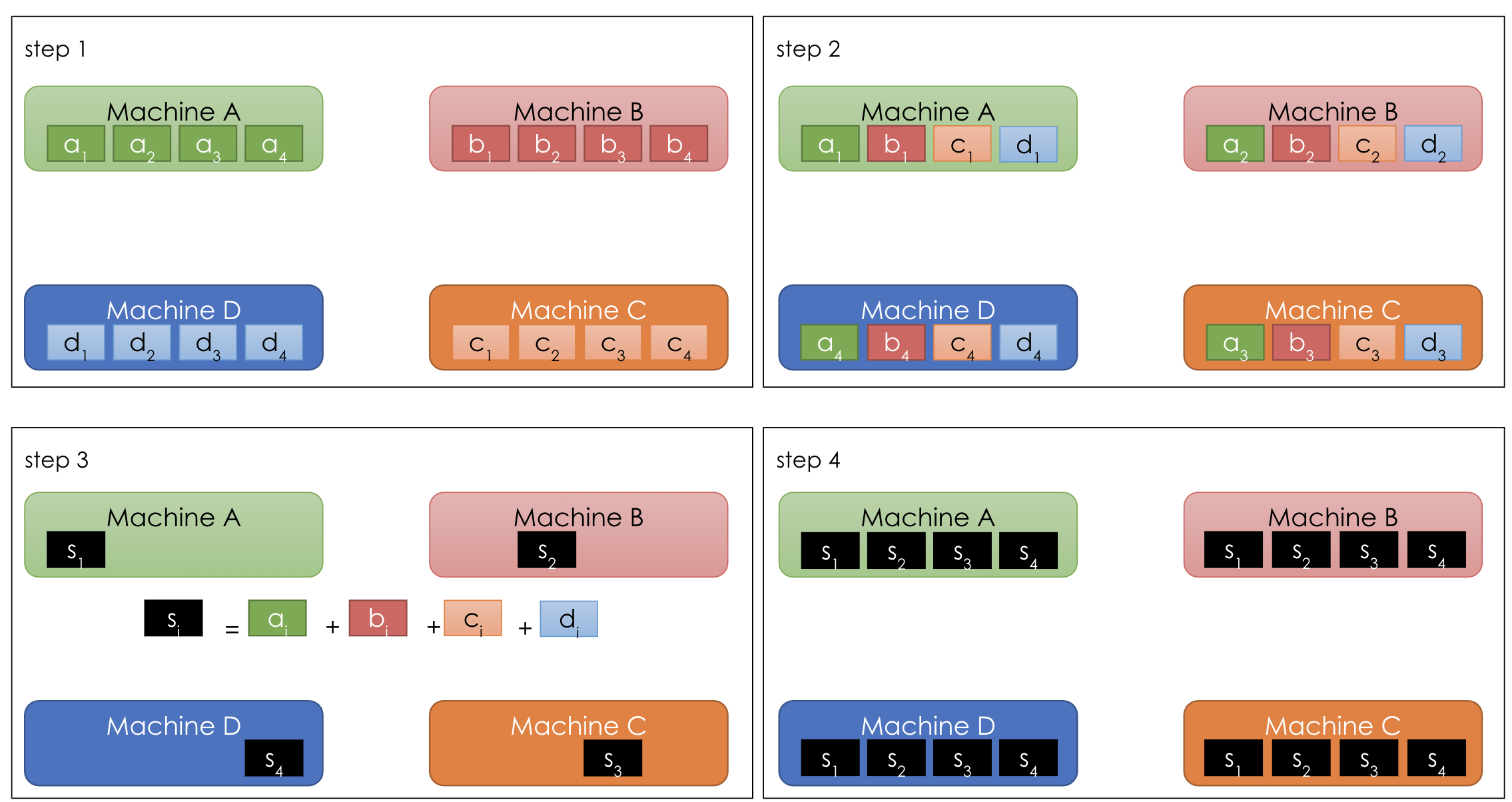

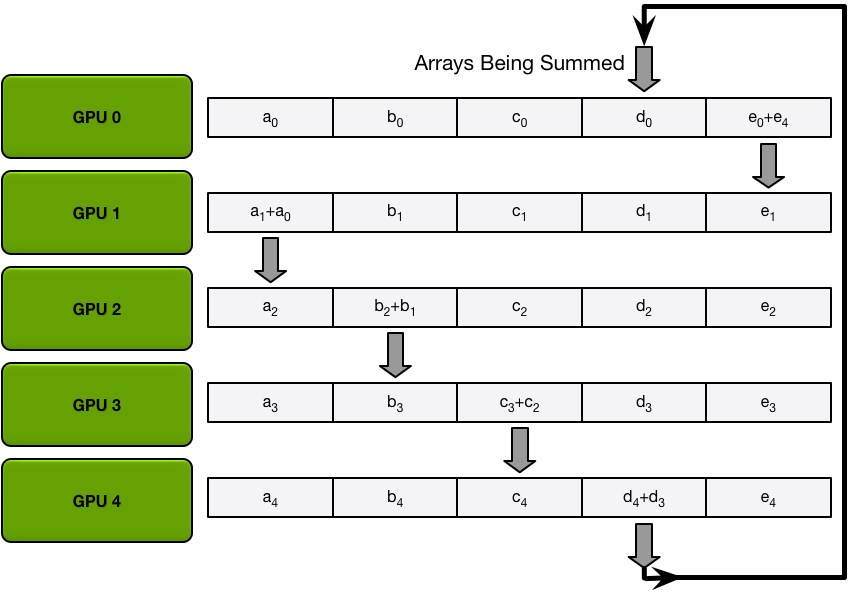

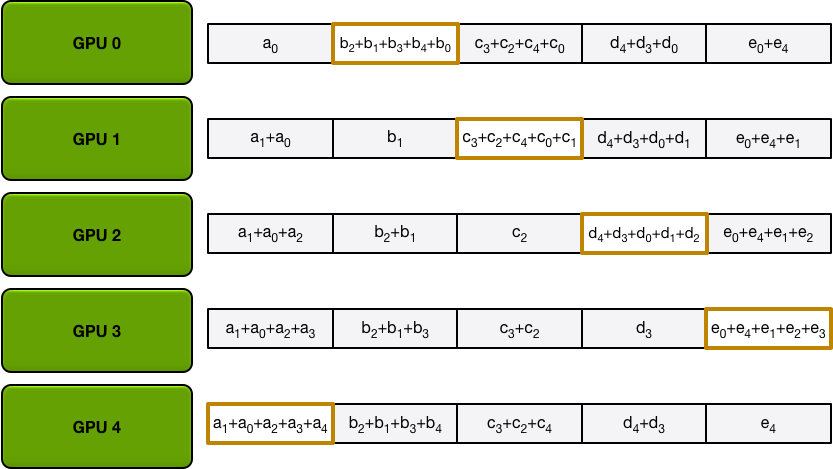

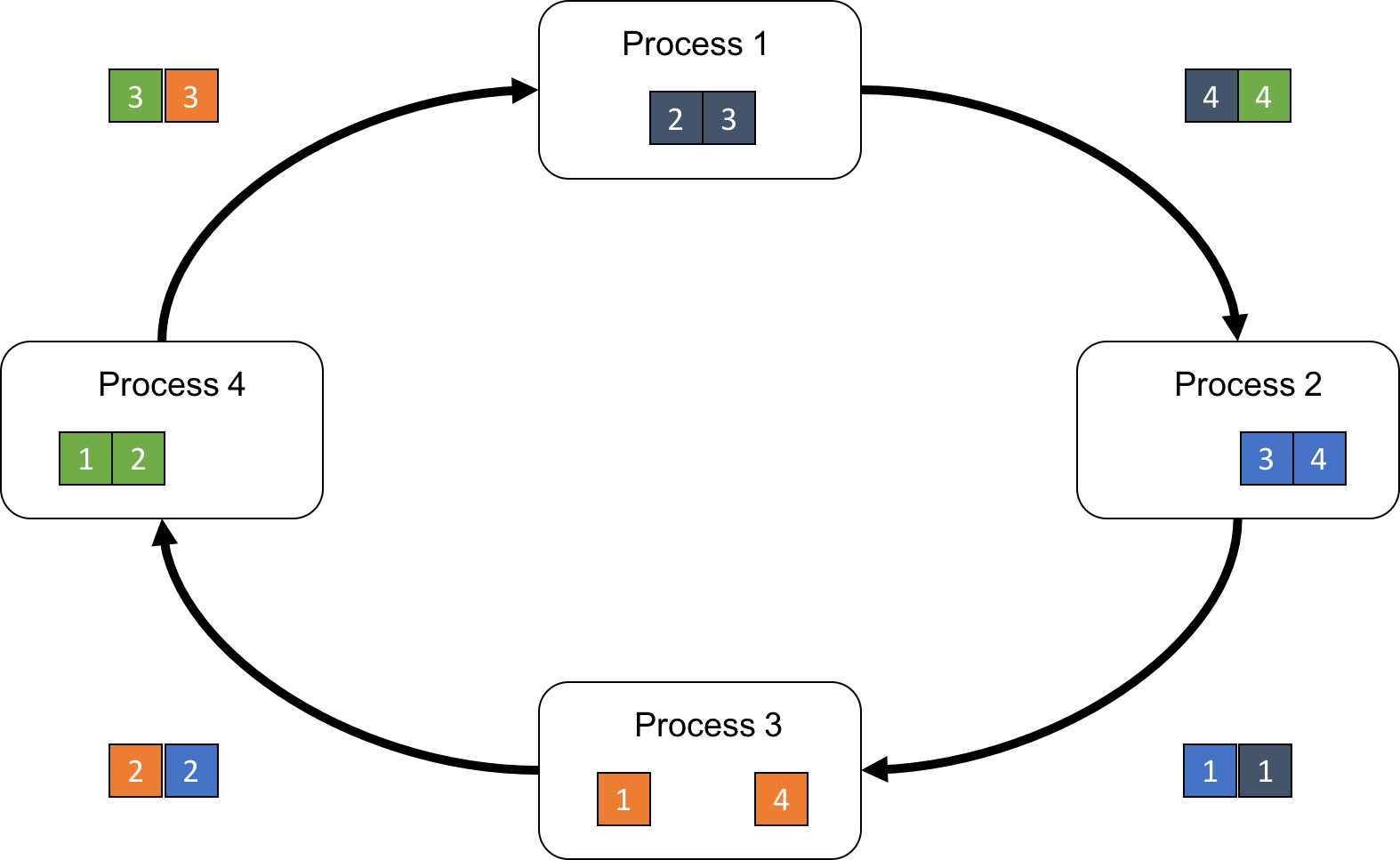

A three-worker illustrative example of the ring-allreduce (RAR) process.

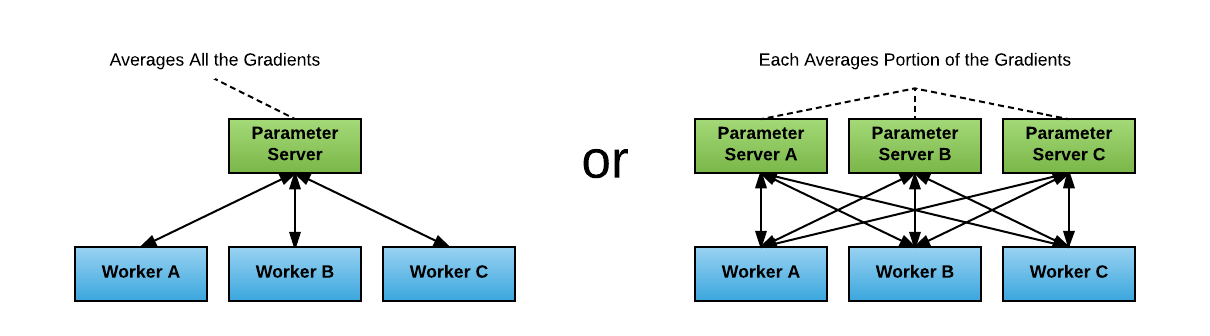

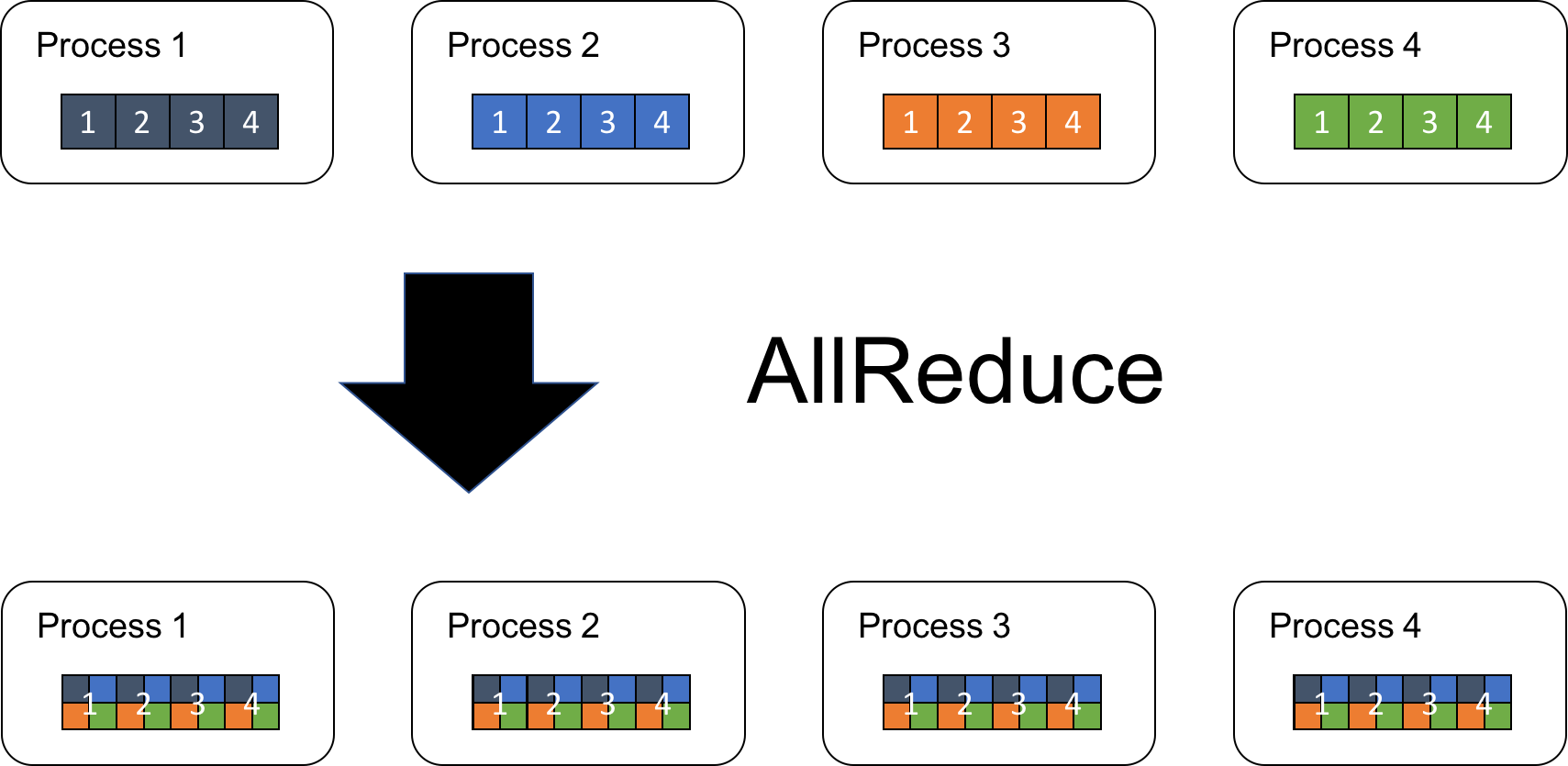

Technologies behind Distributed Deep Learning: AllReduce - Preferred Networks Research & Development

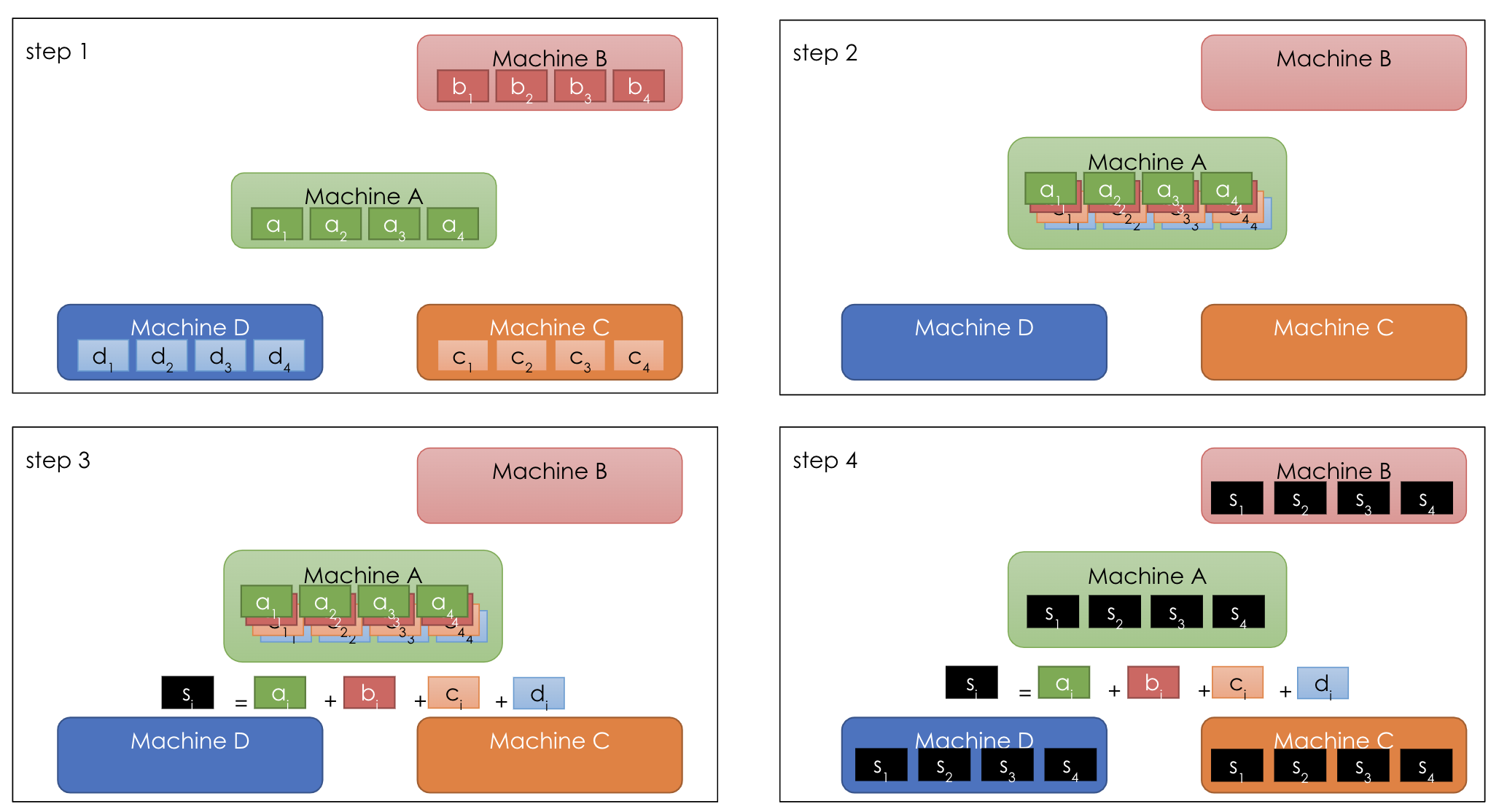

Training in Data Parallel Mode (AllReduce) - TensorFlow 1.15 Network Model Porting and Adaptation, CANN Community Edition 6.0.RC1.alphaX - Ascend Documentation

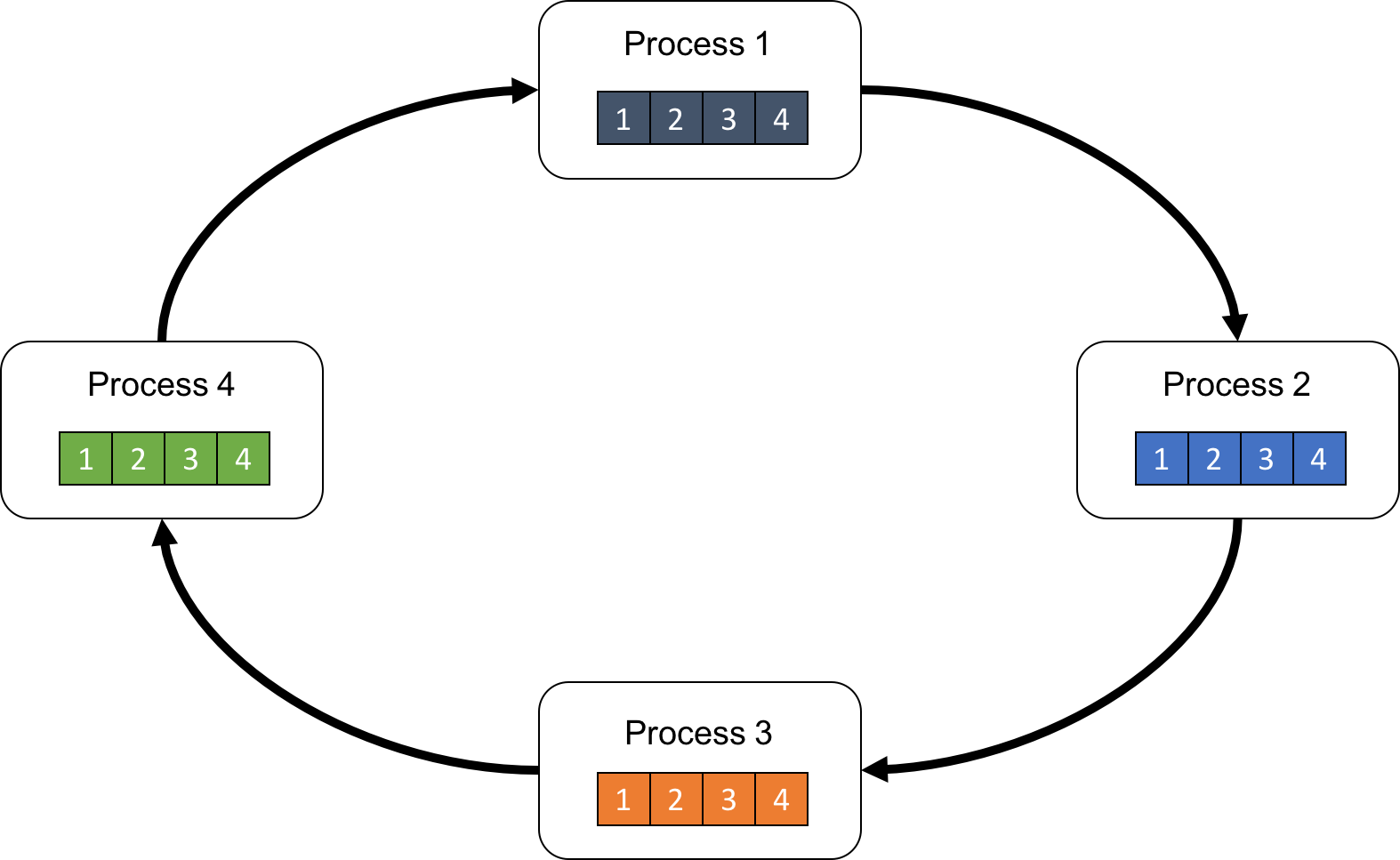

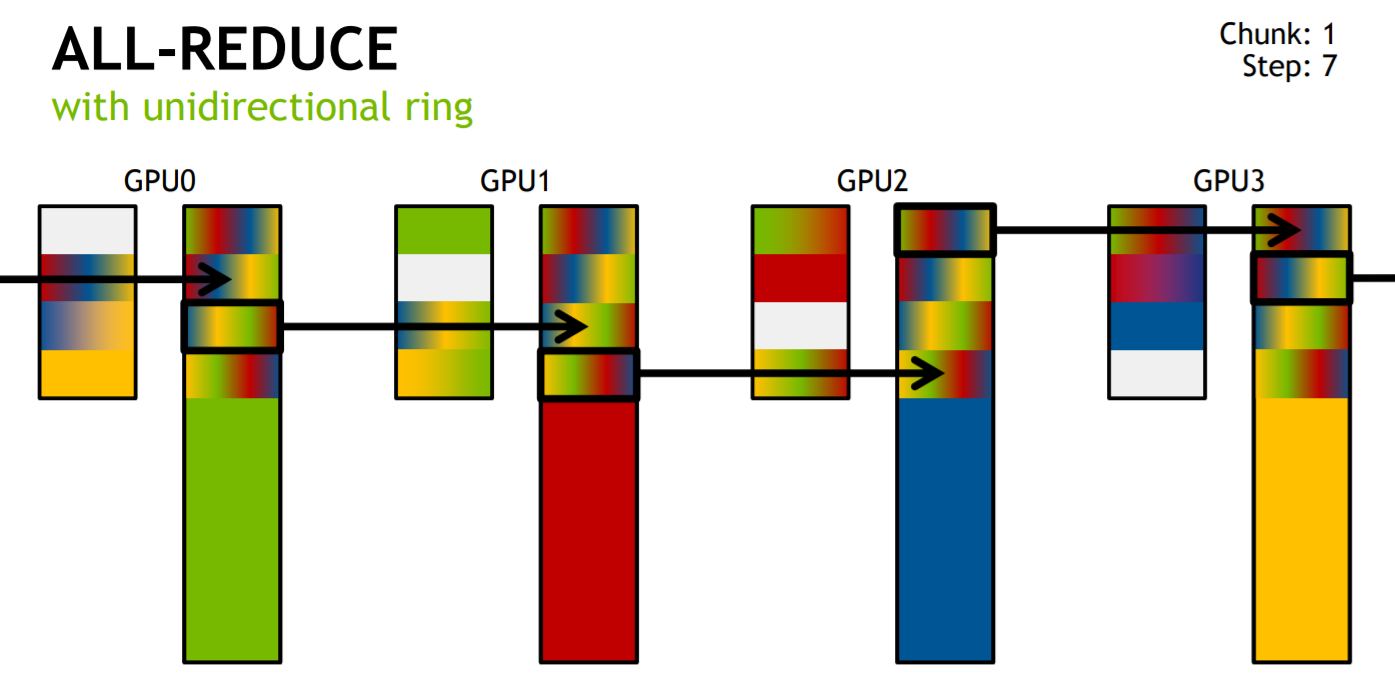

Baidu Research on Twitter: "Baidu's 'Ring Allreduce' Library Increases #MachineLearning Efficiency Across Many GPU Nodes. https://t.co/DSMNBzTOxD #deeplearning https://t.co/xbSM5klxsk"

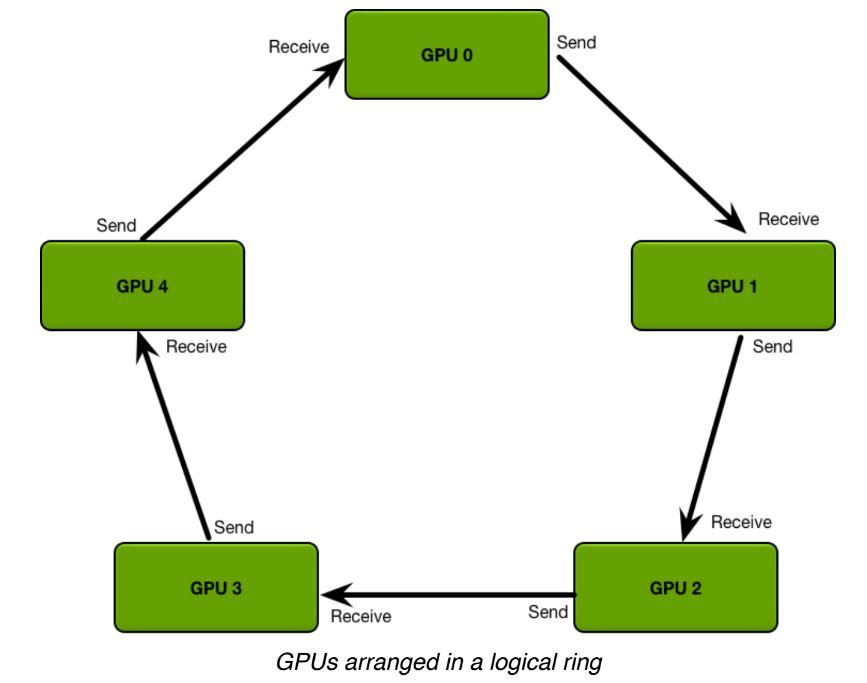

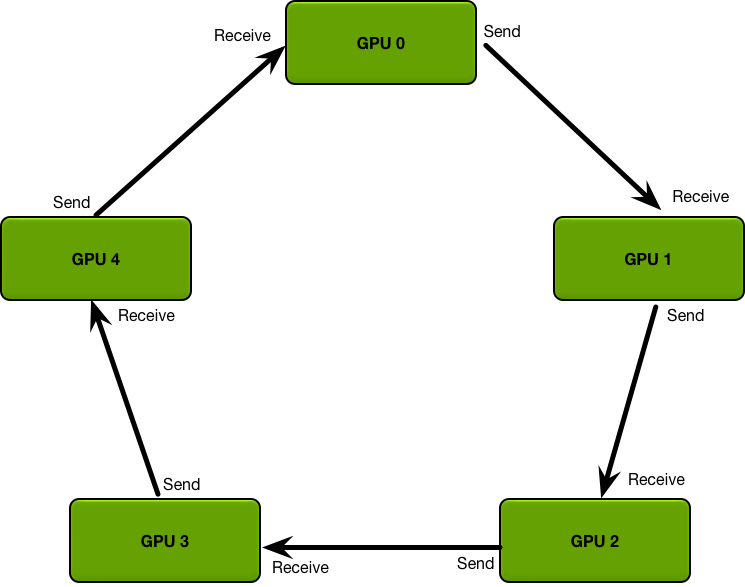

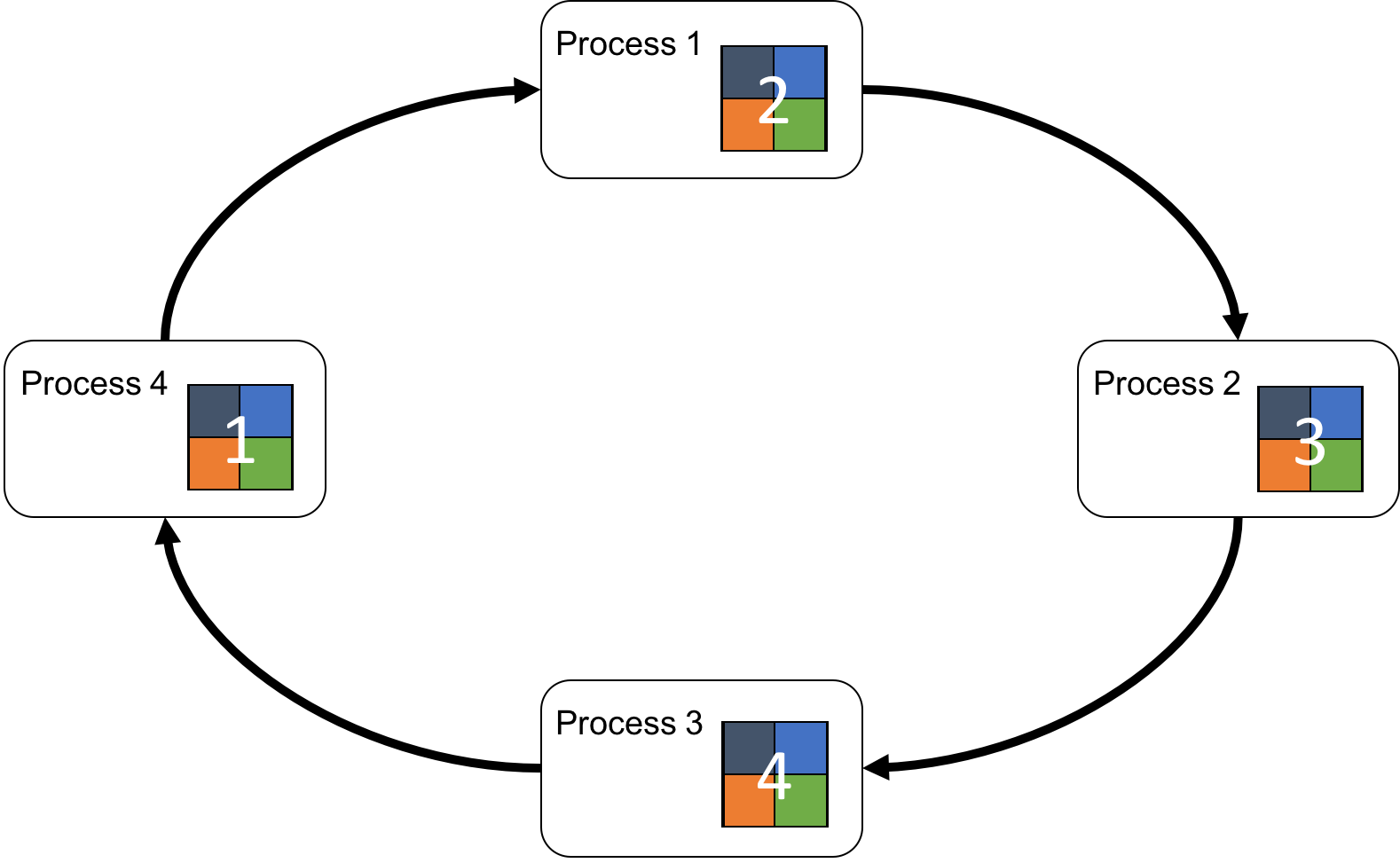

Ring-allreduce, which optimizes for bandwidth and memory usage over latency.

Efficient MPI‐AllReduce for large‐scale deep learning on GPU‐clusters - Thao Nguyen - 2021 - Concurrency and Computation: Practice and Experience - Wiley Online Library